BACKGROUND

The Heartbreaker Wall grew out of a grad school assignment that asked students to re-examine personal rituals. I focused on the rituals associated with the end of a romantic relationship.

PROBLEM

After a break-up, people frequently go through a rite of "cleansing" themselves of their ex by disposing of artifacts from the relationship. People once had the option to burn, flush, crumple, tear up, or otherwise destroy photographs of their exes, but now that most of our photographs only exist digitally, it's difficult to garner any satisfaction from this act. Dragging an icon to the trash bin on a computer just isn't the same as watching an object go up in flames.

CONCEPT

The Heartbreaker Wall makes the ritual of purging photographs a more cathartic experience. The wall displays a montage of photographs along with a menu of virtual objects that can be "thrown" at the wall: paint, eggs, bombs, and rocks. Any physical object can be thrown at the wall, and the animation associated with the selected virtual object plays at the spot where the real object strikes. (We used pillows and stuffed animals in the demo so as not to damage school property, but the experience works with any object.) A satisfying splat, crack, boom, or shattering noise plays upon impact, giving mourners the sense that they've somehow inflicted an imaginary bit of pain upon their ex. In the ideal experience, I imagined displaying visual feedback that the photograph had been damaged or destroyed, as well as actually altering the source files at the OS level.

IMPLEMENTATION

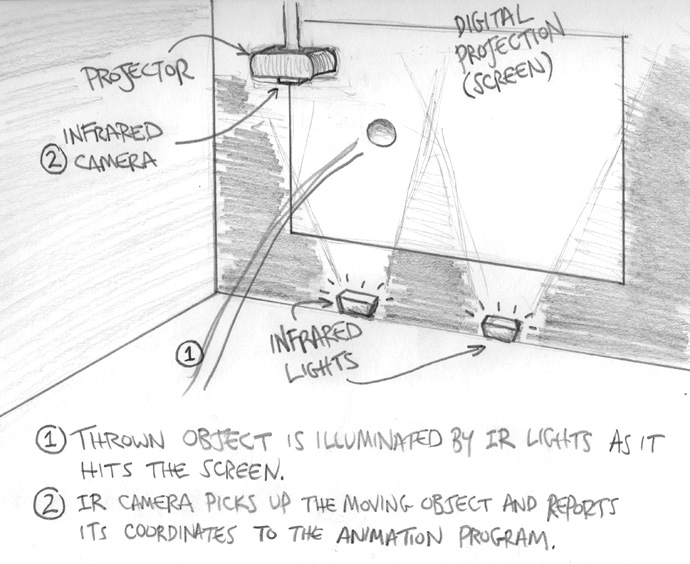

The installation uses an infrared camera and infrared lighting, which circumvents the problems associated with object detection in front-projection systems. Because infrared light falls outside the spectrum of visible wavelengths, the infrared camera doesn't "see" the digital projection on the wall, and instead recognizes objects that are illuminated by the infrared lights as they reach the wall. I used CCV computer vision software for object recognition, which communicates information about detected objects via TUIO. I coded the interaction in Processing, which receives the TUIO data and responds with the various animations and sounds.
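To give a rough idea of that last step, here is a minimal sketch in plain Java (Processing is Java-based). It is not the code from the installation. It assumes the tracker reports each detected object as a TUIO position normalized to the 0.0 to 1.0 range, and it shows how such a position could be mapped to a pixel on the projected wall and paired with the selected virtual object. The class name `ImpactMapper`, its method names, and the pairing of objects to sounds (paint with splat, eggs with crack, bombs with boom, rocks with shatter) are all hypothetical, chosen only for illustration.

```java
/** Hypothetical helper, not the original sketch: maps a TUIO position to a wall impact. */
final class ImpactMapper {

    /** Converts a normalized coordinate (0.0 to 1.0) to a pixel index in [0, sizeInPixels - 1]. */
    static int toPixel(float normalized, int sizeInPixels) {
        // Clamp first, in case the tracker reports a value slightly outside the frame.
        float clamped = Math.max(0f, Math.min(1f, normalized));
        return Math.round(clamped * (sizeInPixels - 1));
    }

    /** Returns the sound for a virtual object. The pairing is an assumption. */
    static String soundFor(String virtualObject) {
        switch (virtualObject) {
            case "paint": return "splat";
            case "eggs":  return "crack";
            case "bombs": return "boom";
            case "rocks": return "shatter";
            default:
                throw new IllegalArgumentException("Unknown object: " + virtualObject);
        }
    }

    /** Describes where the animation would be drawn and which sound would play. */
    static String describeImpact(float x, float y, int wallWidth, int wallHeight, String selected) {
        return soundFor(selected) + " at (" + toPixel(x, wallWidth) + ", " + toPixel(y, wallHeight) + ")";
    }

    public static void main(String[] args) {
        // An object detected at the horizontal center, a quarter of the way down a 1024x768 projection.
        System.out.println(describeImpact(0.5f, 0.25f, 1024, 768, "eggs")); // prints "crack at (512, 192)"
    }
}
```

In a Processing sketch, something like `describeImpact` would likely be called from whatever callback the TUIO client library fires when a new object appears, with the returned position used to draw the animation and trigger the sound.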

Other Projects

AwayWeb homepage

Minibar DeliveryE-Commerce mobile app

KitchensurfingFood service mobile app

PocketMobile app onboarding

Thirty LabsBeta projects

Telecast (Betaworks)Video mobile app

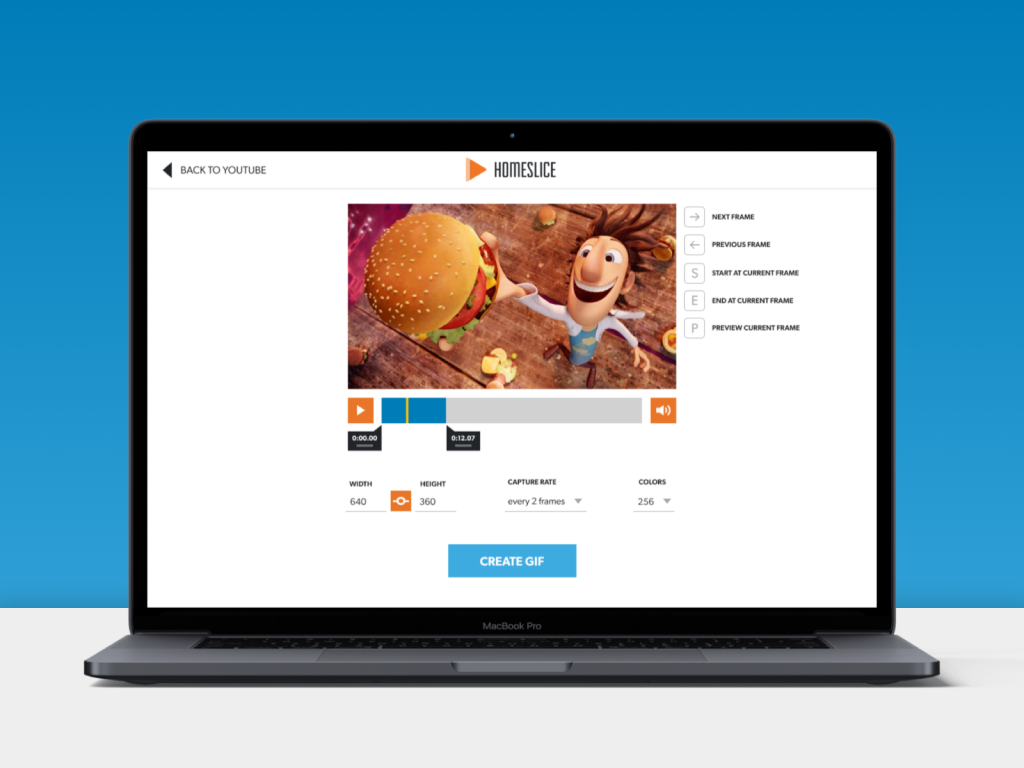

HomesliceGIF-creation extension for Google Chrome